To keep doing RL research, stop calling yourself an RL researcher

On the role of RL researchers in the era of LLM agents.

An irrepressible, uncontainable, uncontrolled stream of papers proposing the use of pretrained Large Language Models (LLMs) for sequential decision-making is pouring out. Armed with hand-crafted representations of the world and pages and pages of carefully-engineered prompts, LLMs seem to show unprecedented abilities that allow them to excel at Minecraft, play games by reading manuals, and solve quite complex computer tasks. There is currently no sane way to compare these approaches to one another, or to previous work. But it really doesn’t matter. It’s a gold rush; it’s a wild west. An entire research community is eager to show that LLMs are better than traditional reinforcement learning methods at performing those tasks.

As a reinforcement learning researcher, it seems easy for you to dismiss all of this. It’s just an unfortunate excursus stemming from another community, a horrifying deep learning flex, a tinkering-based abomination, you may say. It looks to you like another, alien, field, in which you can see no value functions, no temporal difference learning, no policy gradient. But, what if. What if, paper after paper, hack after hack, LLMs start to seem an overall more promising approach to solving complex tasks? What if they become better than your beloved DQN at solving the problems you care about? To your eyes, that could be like declaring yourself and your RL community defeated.

Sutton & Barto’s book on RL says that it is “simultaneously a problem, a class of solution methods that work well on the problem, and the field that studies this problem and its solution methods”. However, although that definition places no conceptual constraint on the methods used, when another researcher is introduced to you as an RL researcher at a conference, your first reaction is likely to wonder which kind of value function, temporal difference learning, or policy gradient research that person might be doing. It’s a strong, unconscious kind of association: the one that makes you see yourself as a reinforcement learning researcher, and the same one that makes you see those LLM papers as coming from a different research field.

If you talk to RL researchers all over the world and ask about the original motivation that got them into the field, you’ll quickly learn that no one was specifically looking forward to dealing with value functions, understanding the dynamics of temporal difference learning, or estimating policy gradients, per se. In one form or another, the shared high-level research goal among all of these researchers is creating agents that are able to interact with an environment to solve sequential decision-making problems, and to understand the science behind these agents. Is it really important if you bootstrap the required knowledge from datasets scraped from the internet? If a planning procedure sounds like an innocent “let’s think step by step” with no theoretical guarantees? If in addition to the space of vectors of successor representations, an agent can reason in the natural language space?

These facts do not seem to change the intimate nature of the research questions that RL folks like to investigate. After all, Sutton & Barto’s book also says that “trial-and-error search and delayed reward are the two most important distinguishing features of reinforcement learning”. On an abstract level, you’ve not only been doing research on temporal difference or policy gradients, you’ve been doing research on credit assignment; you’ve not only been doing research on special representations, you’ve been doing research on generalization in sequential decision-making; you’ve not only been doing research on exploration bonuses, you’ve been doing research on automated data collection. Any successful LLM-based approach applied to sequential decision-making will have to deal with all of those challenges, in one way or another. It will have to face them to learn from interactions, regardless of the explicit use of temporal difference, value functions and, yes, even of scalar reward signals.

This apparently advantageous and exciting situation nonetheless brings new perils: the reinforcement learning community is on the edge of a brand crisis. Many people will be constantly tempted to see the RL researcher as an expert on the tools developed during the last decades, rather than a master of the fundamental research questions behind them. Among these people, many RL researchers themselves will likely try to draw a boundary between “proper RL research” and a growing body of work based on the usage of LLMs as tools. But if this separation becomes widely accepted and LLM-based approaches prove to be as promising as they seem, the brand of reinforcement learning will be deeply damaged. Researchers trying to answer the fundamental questions in the space will have trouble getting funding, jobs, or simply recognition, just because those questions will sound detached from the tools that are maximally useful in practice.

Here’s one suggestion: since the name “reinforcement learning” is at this moment in time so tightly tied to a specific set of techniques, we can try to use other words to associate ourselves with the problem we’re trying to solve and with the fundamental research questions related to it. We could say we work on learning agents for sequential decision-making or on interactive autonomous learning; pick the name you prefer, it’s not the important point. But make sure that name makes clear without any doubt that the research problem deals with an agent that learns or plans by interacting with an environment, even if the agent uses an LLM as the main building block.

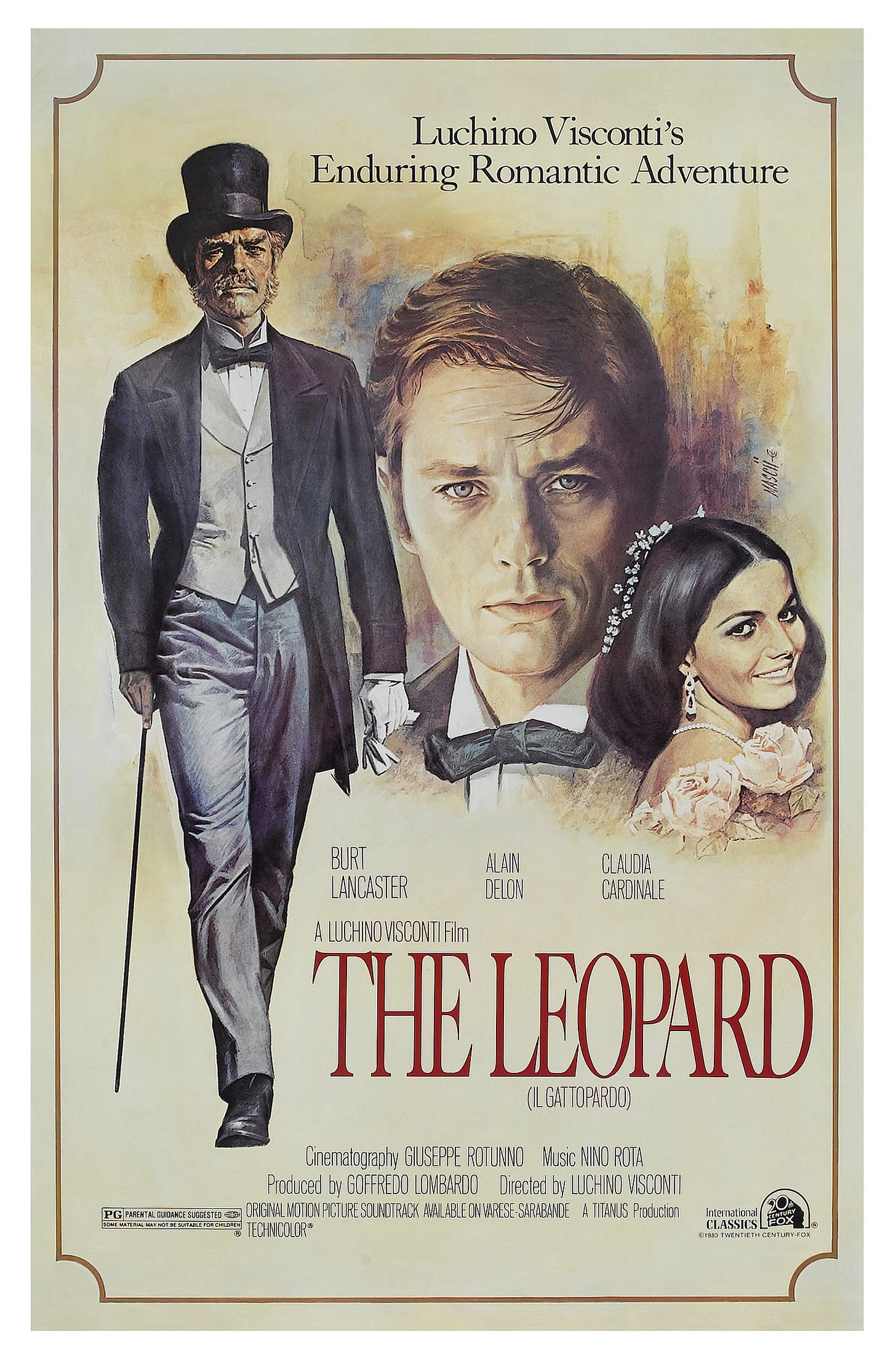

There’s a famous motto in The Leopard, one of the most important Sicilian novels: “Everything must change for everything to remain the same”. It may seem extreme, but to keep doing RL research, stop calling yourself an RL researcher. Then, go on asking and answering the fundamental research questions that brought you to RL.

This is a great piece - I hope you keep writing. I am personally going to fight to keep the name RL because it's the only term that connects multiple things in my mind: time/feedback and reward. It's important.

I wrote about the identity crisis from a different angle here, saying there are three metaphors for RL: https://www.interconnects.ai/p/rl-tool-or-framework-or-agi